AI agents are gaining traction quickly across an array of business applications. From automating customer support to optimizing supply chains and managing decentralized governance in Web3 platforms, these agents are giving an edge to industries seeking innovation in the digital space. However, with this rapid advancement comes an escalating concern around AI agent security: how do we ensure that AI agents remain secure, trustworthy, and privacy-respecting?

AI agents often handle sensitive data, make autonomous decisions, and interact with other agents or humans in dynamic, high-stakes environments. As these agents grow in complexity and independence, the risks of data breaches, model manipulation, and malicious exploitation also rise.

This is where Zero-Knowledge Proofs (ZKPs) step in. A ZKP lets one party verify that a statement is true without seeing the data behind it. For businesses looking to build robust and trustworthy systems, working with expert AI agent development services that integrate ZKPs can be a game-changer.

In this blog post, we will explore AI agents, their vulnerabilities, and how to secure them with ZKPs.

The Growing Footprint of AI Agents in the Digital Era

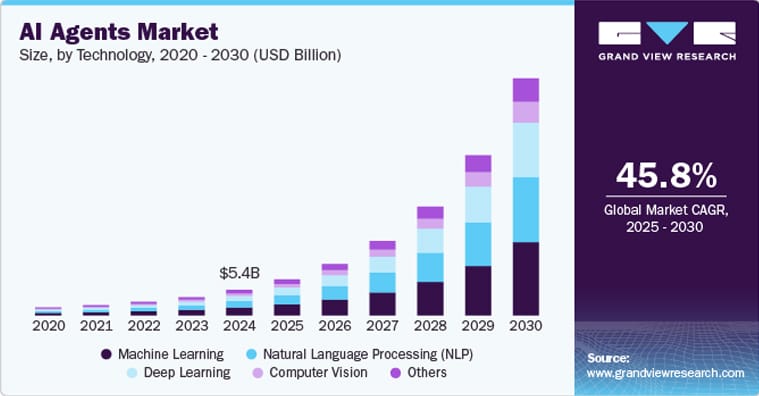

“According to Grand View Research, the global AI agents market size was estimated at USD 5.40 billion in 2024 and is expected to grow at a CAGR of 45.8% from 2025 to 2030.”

Image Source: Grand View Research

The rising demand for automation and personalized customer experience is the primary driver of the AI agents industry’s growth. Additionally, the widespread adoption of cloud computing has made it easier and more cost-effective for businesses to deploy AI agents. Cloud-based platforms let companies scale AI agent applications with lower infrastructure investment, which drives wider adoption across industries.

There are several reasons why businesses worldwide are increasingly investing in AI agents. However, alongside this rapid adoption, a critical question surrounding AI agent security arises: Are these intelligent agents secure enough to be trusted in real-world business environments? Let’s find out.

Are Today’s AI Agents Secure Enough for Tomorrow’s World?

Despite their growing utility, today’s AI agents face significant security challenges that cannot be overlooked. Many AI systems operate as black-box models, which provide little to no transparency into their decision-making processes. This lack of explainability makes it difficult to understand or verify how an AI agent arrives at a particular conclusion. On top of that, vulnerabilities in data pipelines, training environments, and deployment infrastructure increase the risk of tampering and exploitation.

According to the 2025 Armis Cyberwarfare Report, which surveyed over 1,800 global IT decision-makers, nearly 74% of respondents identified AI-powered attacks as a significant threat to their organization’s security.

The study highlights the core issue: if AI agents are to become fundamental components in high-stakes fields such as finance, governance, or healthcare, any breach in decision-making logic or data integrity could trigger large-scale failures, cause irreparable reputational harm, or result in significant financial losses.

AI agents must be designed to be auditable, trustworthy, and secure from the ground up. However, existing AI agent security frameworks still fall short of the stringent demands posed by next-generation AI systems.

Understanding the Risks in AI Agent Security

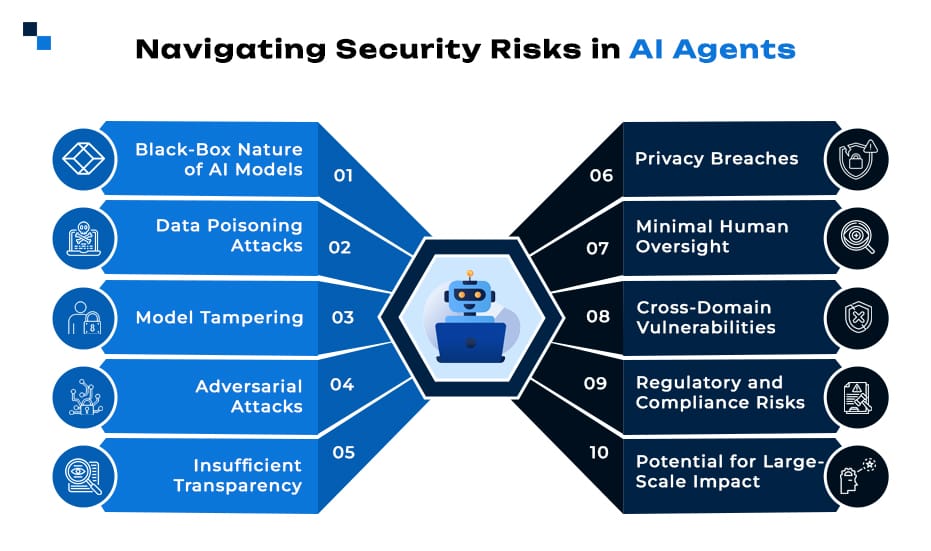

AI agents operate on a complex stack, including data ingestion, model inference, task execution, and communication. Each layer introduces potential attack surfaces, especially when agents are distributed, autonomous, and adaptive. Key risks include:

Black-Box Nature of AI Models

Most AI agents operate as opaque systems. This makes it difficult to interpret or audit how decisions are generated, which undermines trust and accountability.

Data Poisoning Attacks

Malicious actors can manipulate training data to subtly alter an AI agent’s behavior, causing it to make incorrect or harmful decisions.

Model Tampering

Unauthorized modifications to AI models during development or deployment can compromise the integrity and reliability of the agent’s outputs.

Adversarial Attacks

Attackers can exploit vulnerabilities by feeding specially crafted inputs that deceive AI agents into misclassifying or malfunctioning.

Insufficient Transparency

Without clear explanations or verifiable proofs of computations, it is challenging to validate that AI agents are functioning as intended.

Privacy Breaches

AI agents often access sensitive personal or corporate data, which makes them prime targets for data leakage or unauthorized information exposure.

Minimal Human Oversight

Autonomous AI agents operating without continuous human supervision increase the risk of unnoticed errors or malicious activities.

Cross-Domain Vulnerabilities

AI agents interacting across multiple systems or networks face compounded security challenges from diverse attack surfaces.

Regulatory and Compliance Risks

Lack of verifiable audit trails for AI decisions may lead to non-compliance with data protection and industry regulations.

Potential for Large-Scale Impact

AI agents controlling critical infrastructure, financial systems, or healthcare services pose high risks if compromised, potentially resulting in catastrophic failures.

These risks call for a mechanism that builds trust into the AI agent itself, one that lets agents prove the validity of their actions without revealing private or sensitive data.

So, what’s the solution? Using Zero-Knowledge Proofs in AI agents.

Introducing Zero-Knowledge Proofs (ZKPs): A Game-Changer for Secure AI Agents

Zero-Knowledge Proofs (ZKPs) are cryptographic protocols that allow one party (the prover) to convince another (the verifier) that a certain statement is true without revealing anything beyond the fact that it is true. First introduced in the 1980s by Goldwasser, Micali, and Rackoff, ZKPs gained practical momentum through blockchain applications and are now spreading across privacy-critical use cases.

ZKPs enable AI agents to prove they followed a specific logic, processed inputs correctly, or made decisions based on trusted data, without exposing models, inputs, or proprietary algorithms.
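To make the prover and verifier roles concrete, here is a minimal sketch of the Schnorr identification protocol, a classic (honest-verifier) zero-knowledge proof that the prover knows a secret exponent. It is not how an AI agent would prove a full computation, and the group parameters `P`, `Q`, `G` as well as the `Prover`, `Verifier`, and `run_round` names are illustrative assumptions. The toy group is far too small for real use.

```python
import secrets

# Toy parameters (assumption, insecure): P = 2Q + 1 is a safe prime and
# G generates the subgroup of prime order Q.
P, Q, G = 2039, 1019, 4


class Prover:
    def __init__(self, secret: int) -> None:
        self._secret = secret % Q              # never leaves the prover
        self.public = pow(G, self._secret, P)  # value the verifier knows

    def commit(self) -> int:
        self._nonce = secrets.randbelow(Q)     # fresh randomness per round
        return pow(G, self._nonce, P)

    def respond(self, challenge: int) -> int:
        # The random nonce masks the secret in the response.
        return (self._nonce + challenge * self._secret) % Q


class Verifier:
    def __init__(self, public: int) -> None:
        self.public = public

    def challenge(self) -> int:
        self._c = secrets.randbelow(Q)
        return self._c

    def check(self, commitment: int, response: int) -> bool:
        # Accept iff G^response == commitment * public^challenge (mod P).
        lhs = pow(G, response, P)
        rhs = (commitment * pow(self.public, self._c, P)) % P
        return lhs == rhs


def run_round(prover: Prover, verifier: Verifier) -> bool:
    t = prover.commit()
    c = verifier.challenge()
    s = prover.respond(c)
    return verifier.check(t, s)
```

Calling `run_round(Prover(123), Verifier(pow(G, 123, P)))` returns `True`, while the verifier only ever sees the commitment and the response, never the secret itself. In this toy group a prover who does not know the secret can still pass a single round with probability about 1/Q, which is why real deployments use large groups.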

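Two widely used families of ZKPs are zk-SNARKs (Zero-Knowledge Succinct Non-Interactive Arguments of Knowledge) and zk-STARKs (Zero-Knowledge Scalable Transparent Arguments of Knowledge). zk-SNARKs produce very small proofs but often rely on a trusted setup, while zk-STARKs avoid a trusted setup at the cost of larger proofs.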


When you secure AI agents with ZKPs, you’re not just enhancing privacy; you’re laying the foundation for trustworthy and accountable AI systems.

How ZKPs Work in AI Agents to Ensure Security

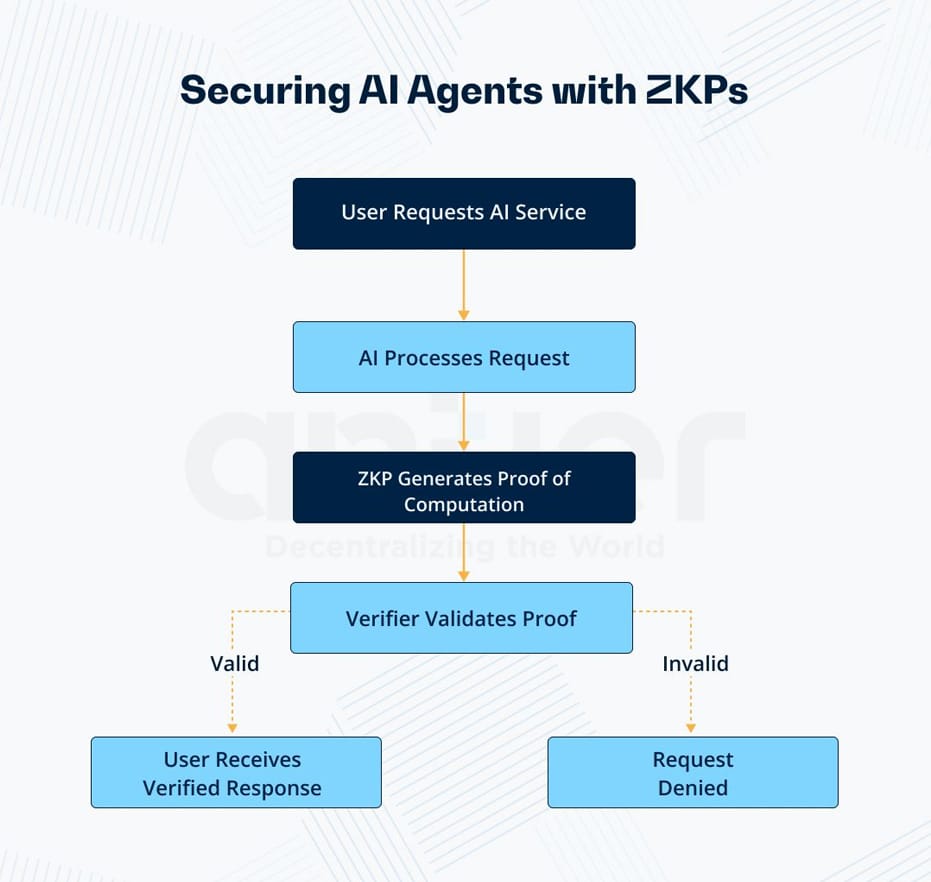

Here’s how a ZKP works, step by step, to maintain integrity and trust in AI agents:

1. User Requests AI Service

A user initiates an interaction with an AI agent, requesting a service, such as data processing, decision-making, or content generation.

2. AI Processes Request

The AI agent performs the requested operation based on its trained models and internal logic, producing an output.

3. ZKP Generates Proof of Computation

Rather than exposing the data or internal logic, the AI agent generates a zero-knowledge proof alongside its output: a cryptographic attestation that the computation was executed correctly and followed pre-defined rules.

4. Verifier Validates Proof

The proof is sent to a verifier, which can be another agent, a system, or the user. The verifier checks the validity of the proof without accessing sensitive data.

- Valid: If the proof is verified as correct, the user receives a trusted response from the AI.

- Invalid: If the proof fails verification, the request is automatically rejected, preventing tampered or faulty computations from going forward.

This flow ensures secure, verifiable, and privacy-preserving operations, critical for scaling trusted autonomous AI.
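The four steps above can be sketched as a small "verify, then release" gate. This is only an illustration of the control flow, under stated assumptions: the proof here is a non-interactive Schnorr proof (Fiat-Shamir) that the agent holds its registered key, bound to the request and output. It does not prove that the computation itself was correct; that would need a zk-SNARK or zk-STARK circuit. `Agent`, `Response`, `verify_and_release`, the uppercase "inference" step, and the toy group are all hypothetical.

```python
import hashlib
import secrets
from dataclasses import dataclass
from typing import Optional

# Toy Schnorr group (assumption, far too small for real use).
P, Q, G = 2039, 1019, 4


@dataclass
class Response:
    output: str
    commitment: int
    answer: int


def _challenge(public_key: int, commitment: int, request: str, output: str) -> int:
    """Fiat-Shamir: derive the challenge by hashing the public transcript."""
    data = f"{public_key}|{commitment}|{request}|{output}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


class Agent:
    def __init__(self) -> None:
        self._secret = secrets.randbelow(Q - 1) + 1  # private, never shared
        self.public_key = pow(G, self._secret, P)    # registered with verifiers

    def handle(self, request: str) -> Response:
        output = request.upper()        # step 2: stand-in for model inference
        nonce = secrets.randbelow(Q)    # step 3: generate the proof
        commitment = pow(G, nonce, P)
        c = _challenge(self.public_key, commitment, request, output)
        answer = (nonce + c * self._secret) % Q
        return Response(output, commitment, answer)


def verify_and_release(public_key: int, request: str, resp: Response) -> Optional[str]:
    """Step 4: return the output only if the proof verifies, else None."""
    c = _challenge(public_key, resp.commitment, request, resp.output)
    lhs = pow(G, resp.answer, P)
    rhs = (resp.commitment * pow(public_key, c, P)) % P
    return resp.output if lhs == rhs else None
```

A caller would run `resp = agent.handle(request)` and pass the result through `verify_and_release`; a `None` return corresponds to the "Invalid" branch, where the request is rejected. Keeping the gate as a separate function means the verifier never needs access to the agent's secret or internals.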

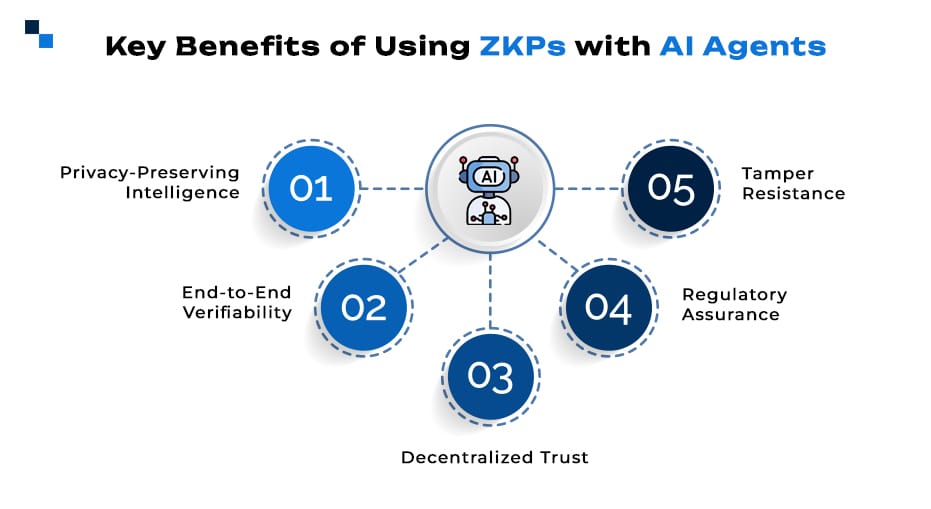

Advantages of Using ZKPs with AI Agents

Using ZKPs in AI agents offers several distinct advantages:

- Privacy-Preserving Intelligence: Decisions can be validated without exposing sensitive models or data.

- End-to-End Verifiability: Every decision or action can be cryptographically verified.

- Decentralized Trust: Reduces reliance on third-party validation in distributed ecosystems.

- Regulatory Assurance: Agents can demonstrate compliance to auditors and regulators without handing over the underlying data.

- Tamper Resistance: Makes unauthorized modifications and deviations from the agreed logic detectable, since a tampered computation cannot produce a valid proof.

These benefits make ZKPs a compelling choice for sectors like healthcare, finance, supply chains, and DeFi, where AI is expected to operate under strict trust and compliance regimes.
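One building block behind the verifiability and tamper-resistance points above is the cryptographic commitment. The sketch below, offered as an assumption-laden illustration rather than a full ZKP system, keeps a hash-chained log of salted commitments to agent decisions: an auditor can check that the log was not altered, and a single decision can later be opened without exposing the others. All function names and the record layout are hypothetical.

```python
import hashlib
import json
import secrets
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def commit(decision: Dict[str, object], salt: bytes) -> str:
    """Salted SHA-256 commitment; the decision stays hidden without the salt."""
    payload = salt + json.dumps(decision, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: List[Dict[str, str]], decision: Dict[str, object]) -> bytes:
    """Append a commitment to the chain and return the salt (kept private)."""
    salt = secrets.token_bytes(16)
    prev = log[-1]["entry_hash"] if log else GENESIS
    commitment = commit(decision, salt)
    entry_hash = hashlib.sha256((prev + commitment).encode()).hexdigest()
    log.append({"prev": prev, "commitment": commitment, "entry_hash": entry_hash})
    return salt


def chain_is_intact(log: List[Dict[str, str]]) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        expected = hashlib.sha256((prev + entry["commitment"]).encode()).hexdigest()
        if entry["prev"] != prev or entry["entry_hash"] != expected:
            return False
        prev = entry["entry_hash"]
    return True


def opens_to(entry: Dict[str, str], decision: Dict[str, object], salt: bytes) -> bool:
    """Check that a revealed decision and salt match the logged commitment."""
    return entry["commitment"] == commit(decision, salt)
```

A commitment on its own is not zero-knowledge proof of anything beyond "this value was fixed at that time", but ZKP systems typically prove statements about committed values like these.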

Conclusion: ZKPs are the Key to Trusted AI Autonomy

As AI agents transition from controlled lab environments to real-world applications, their security and trustworthiness cannot be taken for granted. Zero-Knowledge Proofs (ZKPs) offer a breakthrough approach, enabling autonomous agents to validate their decisions, processes, and compliance without exposing proprietary information or compromising privacy.

Antier is a leading AI agent development company, specializing in integrating advanced cryptographic solutions like ZKPs into AI and blockchain frameworks. Whether you’re building AI agents for DeFi, supply chains, or smart cities, our team ensures your solutions are not only intelligent but verifiably secure.

Want to integrate Zero-Knowledge Proofs into your AI pipelines, or looking for advanced AI agent development services and support? Let’s talk. Our team at Antier specializes in building ZK-powered, privacy-first AI applications.

Get in touch for a free consultation.